I am currently a second-year PhD student at National Taiwan University in the Speech Processing and Machine Learning Lab, supervised by Prof. Hung-yi Lee. My research interests include speech foundation models, spoken language models, model compression, neuron analysis, and model merging. I am eager to explore new research areas and am currently looking for research internships in 2026. If there are any opportunities for research collaboration, please feel free to contact me.

🔥 News

- 2025.11: 🎉🎉 One journal paper accepted at IEEE Transactions on Audio, Speech and Language Processing (TASLP)!

- 2025.08: 🎉🎉 One paper accepted at APSIPA ASC 2025 main conference track. See you in Singapore 🇸🇬!

- 2025.08: 🎉🎉 One paper accepted at ASRU 2025 main conference track. See you in Hawaii 🇺🇸🥥🌴!

- 2024.09: 🎉🎉 Two papers accepted at SLT 2024 main conference track. See you in Macao 🇲🇴!

- 2024.06: 🎉🎉 Two papers accepted at Interspeech 2024. See you in Greece 🇬🇷!

- 2023.09: 🎉🎉 One paper accepted at ASRU 2023.

📝 Publications

Tzu-Quan Lin, Wei-Ping Huang, Hao Tang, Hung-yi Lee

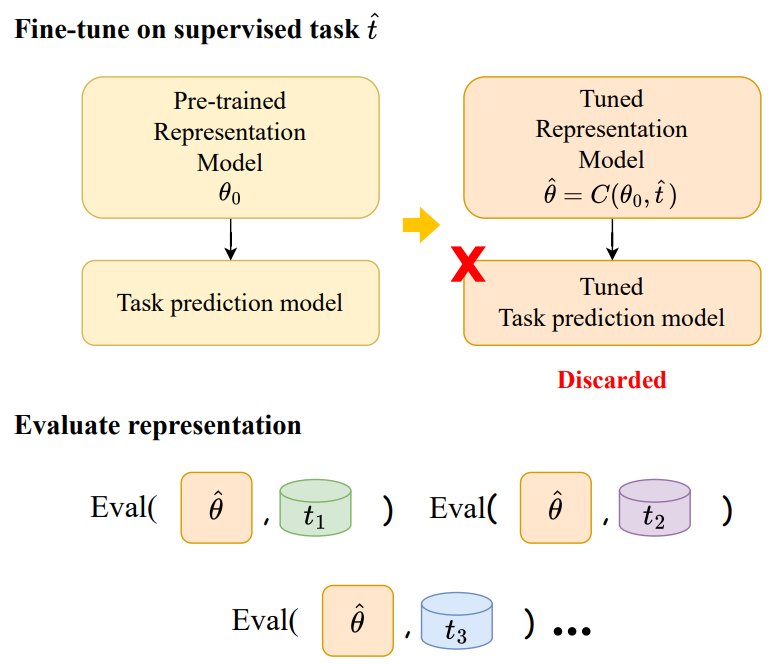

- Speech-FT is a two-stage fine-tuning framework designed for speech representation learning. It improves performance on specific tasks while maintaining cross-task generalization ability.

- Speech-FT improves HuBERT’s performance on SUPERB by reducing phone error rate from 5.17% to 3.94%, lowering word error rate from 6.38% to 5.75%, and boosting speaker ID accuracy from 81.86% to 84.11%.

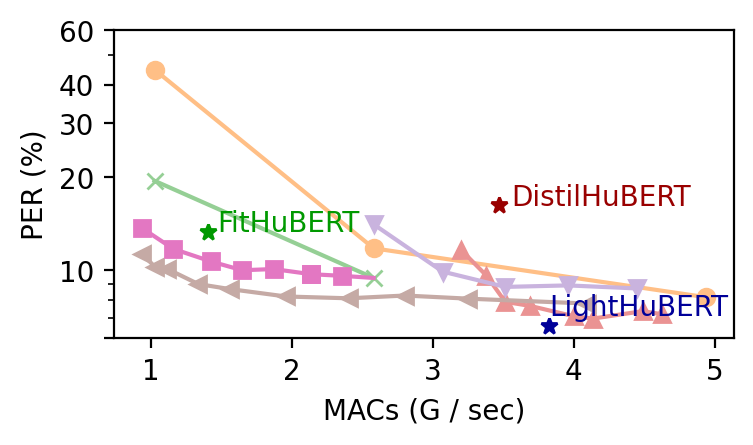

Is Smaller Always Faster? Tradeoffs in Compressing Self-Supervised Speech Transformers

Tzu-Quan Lin, Tsung-Huan Yang, Chun-Yao Chang, Kuang-Ming Chen, Tzu-hsun Feng, Hung-yi Lee, Hao Tang

- This work proposes evaluating model compression methods with three metrics: MACs, number of parameters, and real-time factor. We find that different compression methods excel on different metrics.

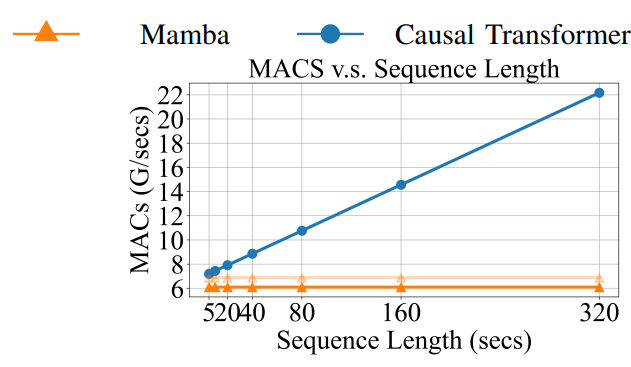

An Exploration of Mamba for Speech Self-Supervised Models

Tzu-Quan Lin, Heng-Cheng Kuo, Tzu-Chieh Wei, Hsi-Chun Cheng, Chun-Wei Chen, Hsien-Fu Hsiao, Yu Tsao, Hung-yi Lee

- This work explores Mamba-based HuBERT as a speech SSL model, showing its advantages in long-context and streaming ASR, improved speech unit quality, and competitive performance on probing tasks compared to Transformer-based models.

Identifying Speaker Information in Feed-Forward Layers of Self-Supervised Speech Transformers

Tzu-Quan Lin, Hsi-Chun Cheng, Hung-yi Lee, Hao Tang

- This work identifies speaker-relevant neurons in self-supervised speech Transformers and shows that preserving them during pruning helps maintain performance on speaker-related tasks.

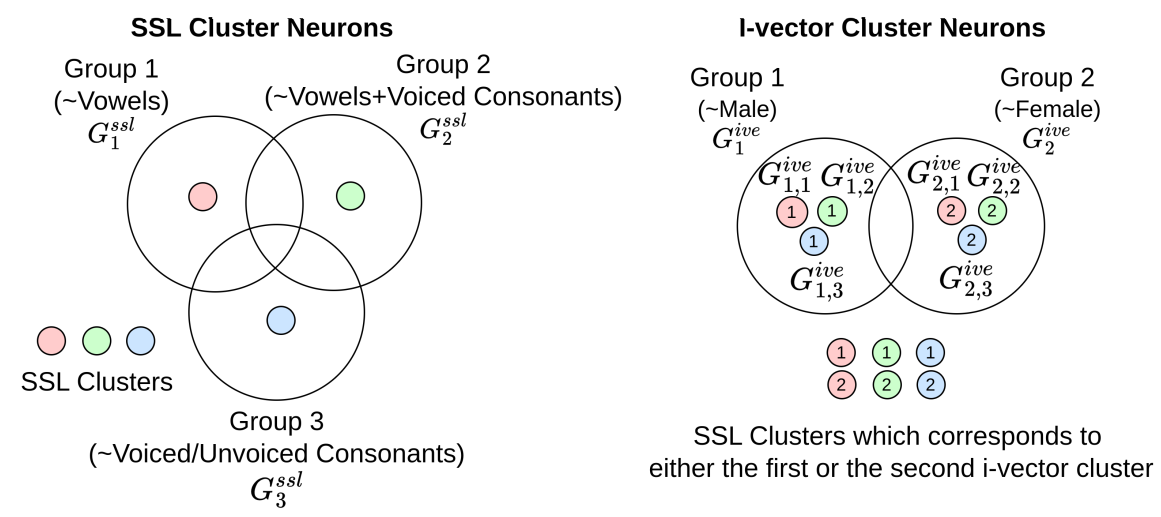

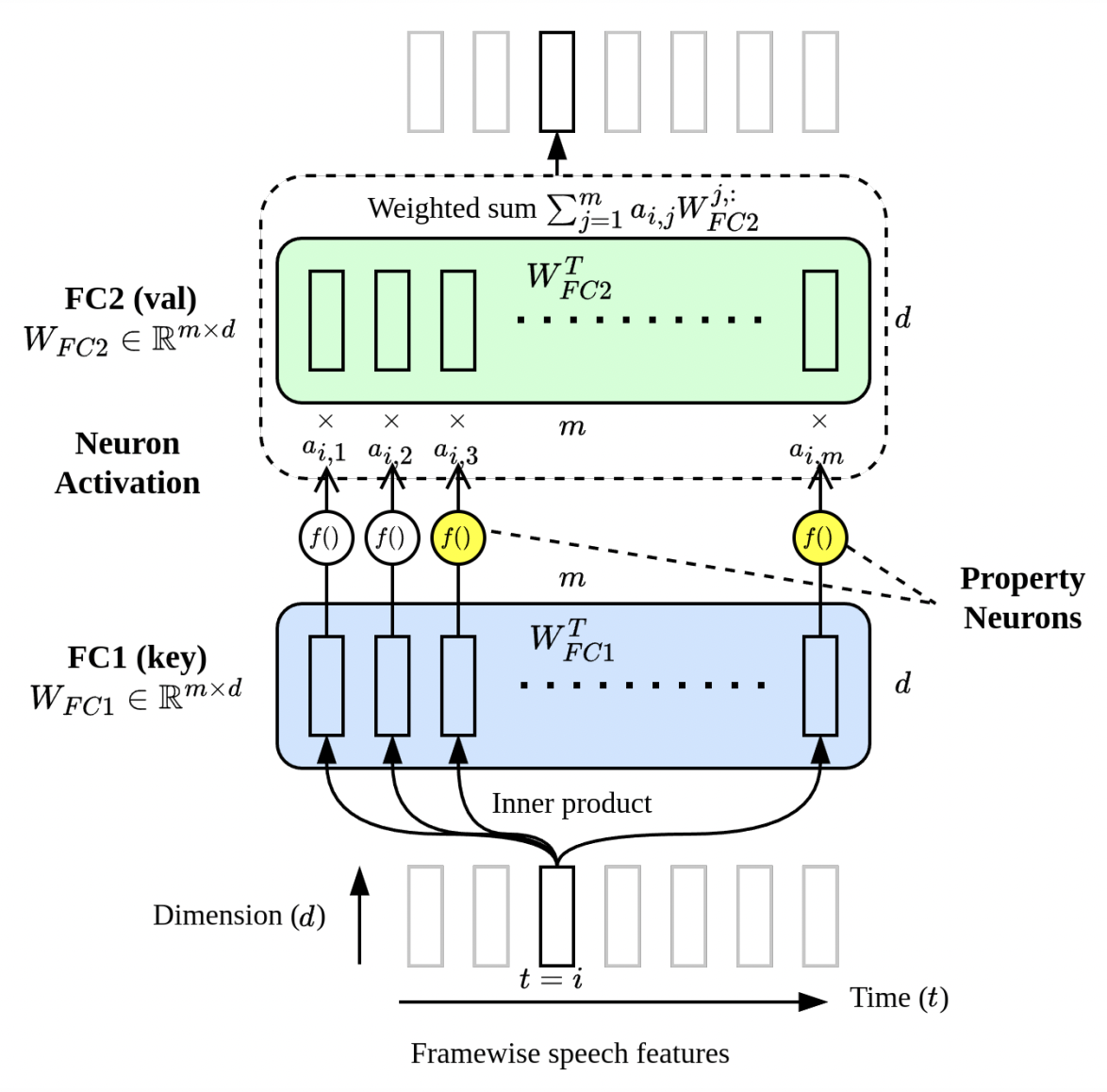

Property Neurons in Self-Supervised Speech Transformers

Tzu-Quan Lin, Guan-Ting Lin, Hung-yi Lee, Hao Tang

- In this work, we identify a set of property neurons in the feed-forward layers of Transformers to study how speech-related properties, such as phones, gender, and pitch, are stored.

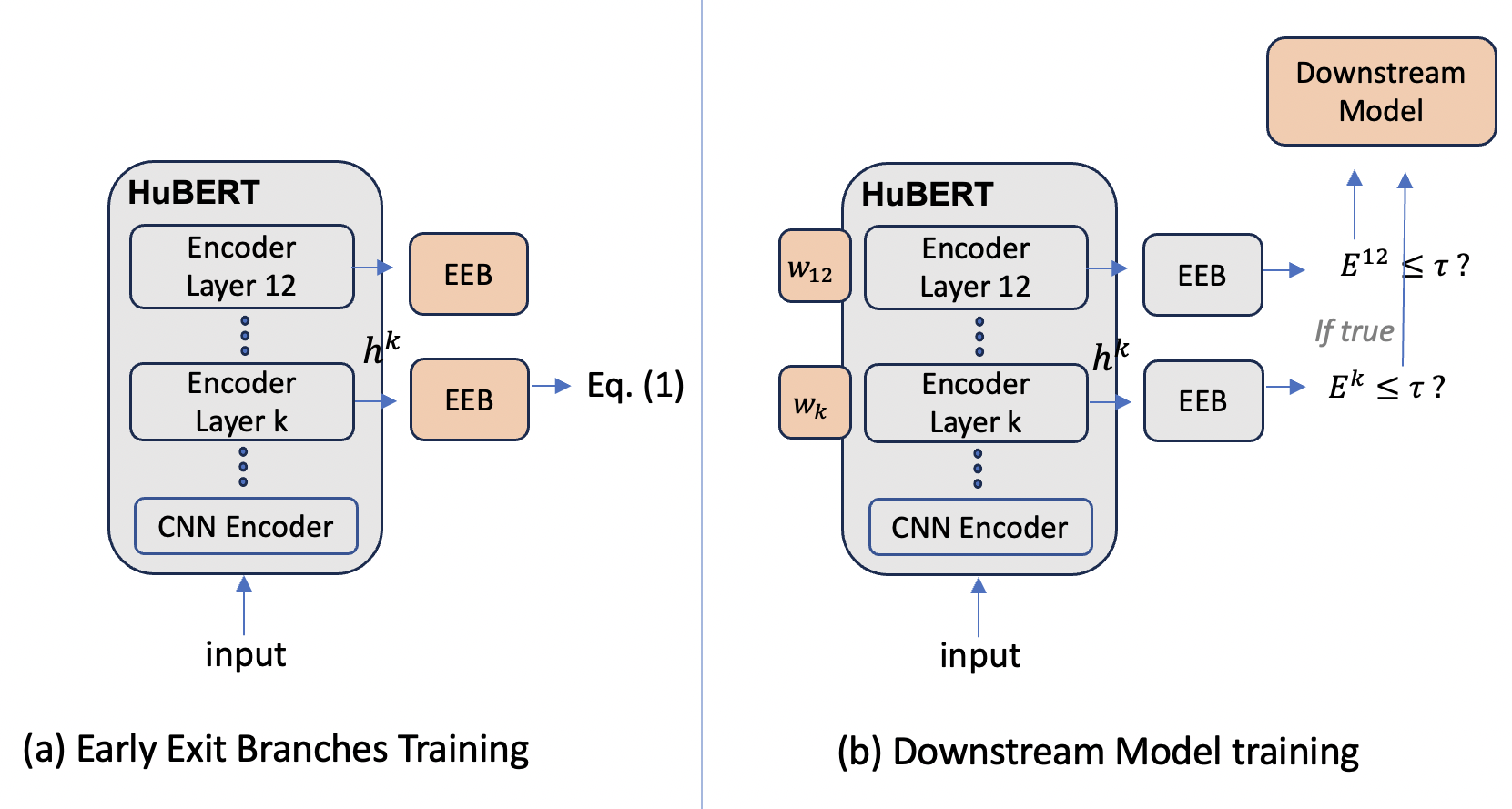

DAISY: Data Adaptive Self-Supervised Early Exit for Speech Representation Models

Tzu-Quan Lin, Hung-yi Lee, Hao Tang

- This work introduces a novel early-exit method for speech self-supervised models that improves the inference speed of HuBERT with minimal performance loss.

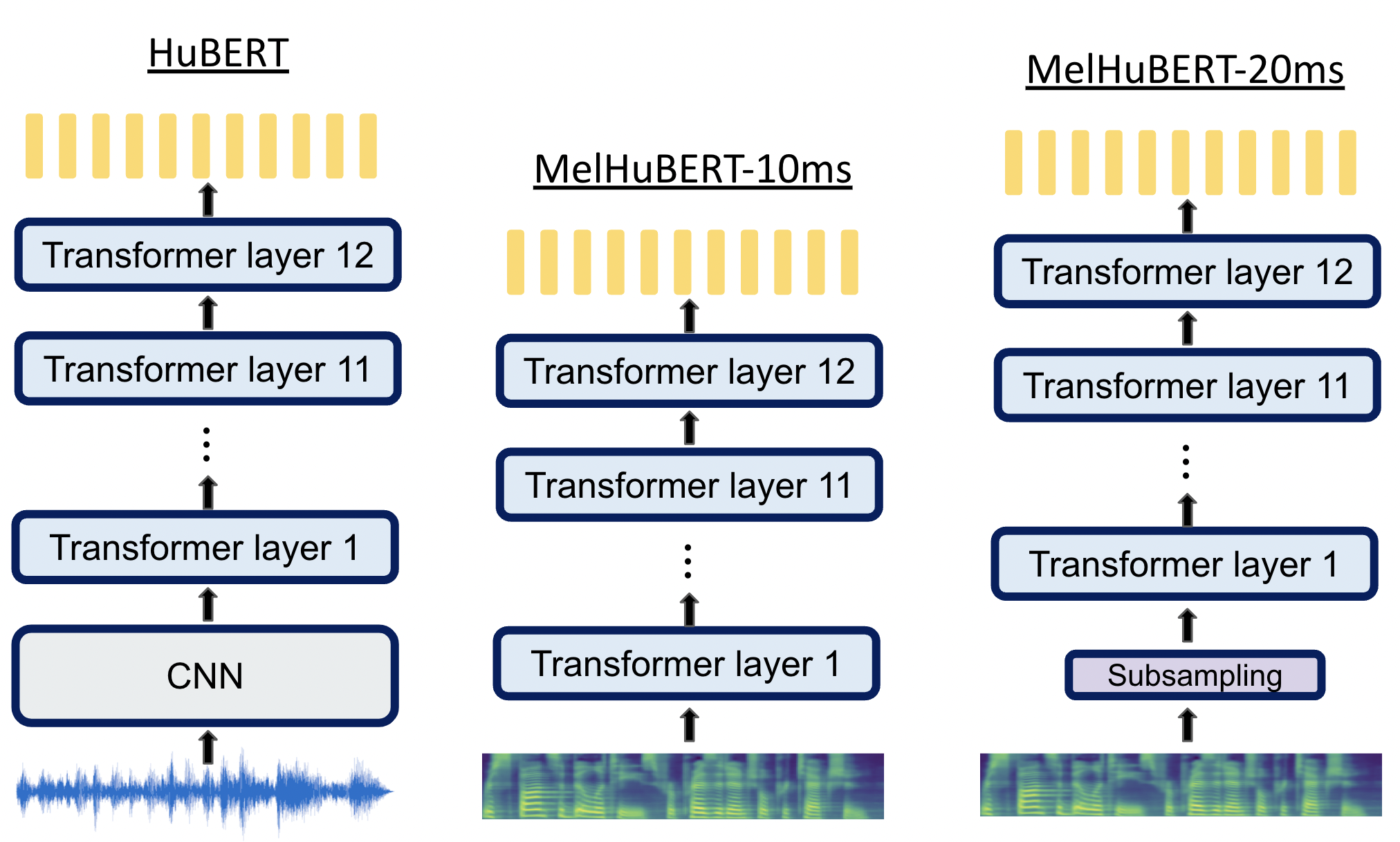

MelHuBERT: A Simplified HuBERT on Mel Spectrograms

Tzu-Quan Lin, Hung-yi Lee, Hao Tang

- MelHuBERT simplifies the model architecture and loss function of HuBERT, achieving comparable performance while saving 33.5% of MACs per second of speech.

- Listen and Speak Fairly: A Study on Semantic Gender Bias in Speech Integrated Large Language Models, Yi-Cheng Lin, Tzu-Quan Lin, Chih-Kai Yang, Ke-Han Lu, Wei-Chih Chen, Chun-Yi Kuan, Hung-yi Lee. SLT 2024

- On the Social Bias of Speech Self-Supervised Models, Yi-Cheng Lin, Tzu-Quan Lin, Hsi-Che Lin, Andy T. Liu, Hung-yi Lee. Interspeech 2024, Best Paper Runner-up in Special Session

- SUPERB @ SLT 2022: Challenge on Generalization and Efficiency of Self-Supervised Speech Representation Learning, Tzu-hsun Feng, Annie Dong, Ching-Feng Yeh, Shu-wen Yang, Tzu-Quan Lin, Jiatong Shi, et al. SLT 2022, Best Paper Finalist

📖 Education

- 2024.07 - now, PhD in Electrical, Electronics, Communications Engineering (EE), Data Science and Smart Networking, National Taiwan University

- 2022.07 - 2024.06, Master's in Computer Science and Information Engineering (CSIE), Networking and Multimedia, National Taiwan University

- 2018.09 - 2022.06, Bachelor's, Department of Computer Science and Information Engineering (CSIE), National Taiwan University

🏆 Honors and Awards

- Interspeech 2024 Travel Grant

💻 Internships

- 2021.07 - 2021.09, aetherAI, Taipei, Taiwan.